Making AI assistants people will be excited about

Intuitive. Social. Trusted. Nurturing. Multimodal.

Some of these aren't words we hear a lot about AI, particularly not in online conversations. When we ran an experiment to synthesize general sentiment about AI, current trends, and emerging AI case studies, we found mixed reviews instead.

Posts about the rabbit r1, the Humane Ai pin, and other AI assistants show enthusiasm, but also skepticism and concern. Popular technology YouTuber Marques Brownlee titled his review of the Humane Ai pin “The Worst Product I've Ever Reviewed... For Now.” But some commenters were really positive. One wrote, “If someone can make an AI OS that works like this rabbit r1 demo, there’s another empire waiting to be built.” On Character.ai’s TikTok account, people most frequently commented about feeling an obsessive love for the app, or even an addiction.

Different people have different needs and expectations, and the future of AI is not one-size-fits-all—or even custom in the way we understand it today. Instead, it will be defined by the proliferation of personalized, adaptive, and specialized solutions to a broad range of needs defined for each individual user. Today, users turn to AI assistants to explore, with an understanding that the outputs need to be reviewed with a critical eye. Widespread adoption of AI assistants in everyday life will require that users can have confidence in their output and actions.

To succeed, we believe these products will need to be five things—intuitive, social, trusted, multimodal, and nurturing.

With some inspiration from products we admire on the market, and signals about what’s coming, let's explore some early concept provocations for how each of these principles could make AI feel great to use and deliver on its promise—in the near-term and farther down the line.

Intuitive entry points

Technologies that rely on AI promise to streamline our lives, but the current AI landscape is overwhelming. From choosing the right tool for a task to learning how to properly prompt it, the barrier to entry can be daunting for casual consumers. AI is ripe for an evolution into truly intuitive modes of interaction that make it easy to use and accessible for everyone.

Near vision: An AI platform that bridges multiple AI providers and tools with an added layer of automation that picks the optimal toolset and flow for a user.

Products like Perplexity AI show early visions of this style of simplified entry point by offering several large language models (LLMs) as options in their search tools. Perplexity’s focus on mapping sources of data allows users to further explore information, pointing to a future where the AI can take further steps within that data for the user.

Far vision: A product that serves as the conductor of an orchestra of specialized AI agents that work together to accomplish complex tasks on behalf of the user—with minimal prompting and no need for detailed instructions. It would have holistic platform integration through a dedicated app, Siri, Hey Google, text, phone, or email, and be able to do things like schedule vaccine appointments for the whole family.

Inspiration: Forethought, AI-first customer support service; Simplified, a specialized AI suite of marketing and collaboration tools; and Arc Max, an AI-powered web browser that seamlessly integrates LLMs and AI tools with the website browsing experience.

Social goals

Across our work, we are seeing that people are more connected to technology, but less connected to themselves and to others. AI technologies are a unique addition that can help change that. Instead of adding to the digital noise, new AI tools should help people engage with technology in ways that align with their values and lead them to healthy human connections.

Near vision: Opt-in AI assistants that use the data already on users’ phones to offer nudges and assistance on actions that support their social goals. They can do things like check users’ step count and the weather, to nudge them to get outside with friends on a sunny day.

Far vision: An AI-powered journal that acts as a digital mirror. It gently nudges users toward their goals and helps them reflect on their day. Users decide what data to share with it and which areas of their lives they want it to help with. It can provide support by, say, telling users it’s proud of them for chatting with their mom today when that was a hard thing to do six months ago, and offering to send her flowers as a follow-up.

Inspiration: Character.ai, which is attracting a younger demographic with its offer of human-sounding virtual characters that offer social enrichment and companionship; Journal, Apple’s new iPhone app, which helps users reflect and practice gratitude; Hume AI’s Empathic Voice Interface (EVI), which interprets emotional expressions and generates empathic responses; and ETHIQLY, which is a startup IDEO helped create that aids teachers as they scale (and deepen) connections with their students with the help of generative AI.

Trust and safety

We are living in a data-driven world, where fear and skepticism about AI and about fair data use prevail. For users to truly embrace AI, the industry needs to build trust in the safety, accuracy, and intentions of these applications. Only then can all people use them with confidence.

Near vision: Governance projects that scale safety and compliance solutions for businesses and consumers. When users interact with a new AI tool, they can see its safety rating and a detailed report, as well as flags about AI-generated content.

Far vision: AI models are subject to a robust AI safety pipeline before being used by people. Once approved, consumers can feel confident that their data and accounts are secure and information provided is true.

Inspiration: Credo AI, which provides an intelligence layer that tracks, assesses, reports, and manages AI systems to ensure they are effective, compliant, and safe; IBM’s investment in R&D on human-centered AI (HCAI) solutions that amplify and augment human capabilities; and Anthropic, which provides visibility into its models and oversight into AI governance.

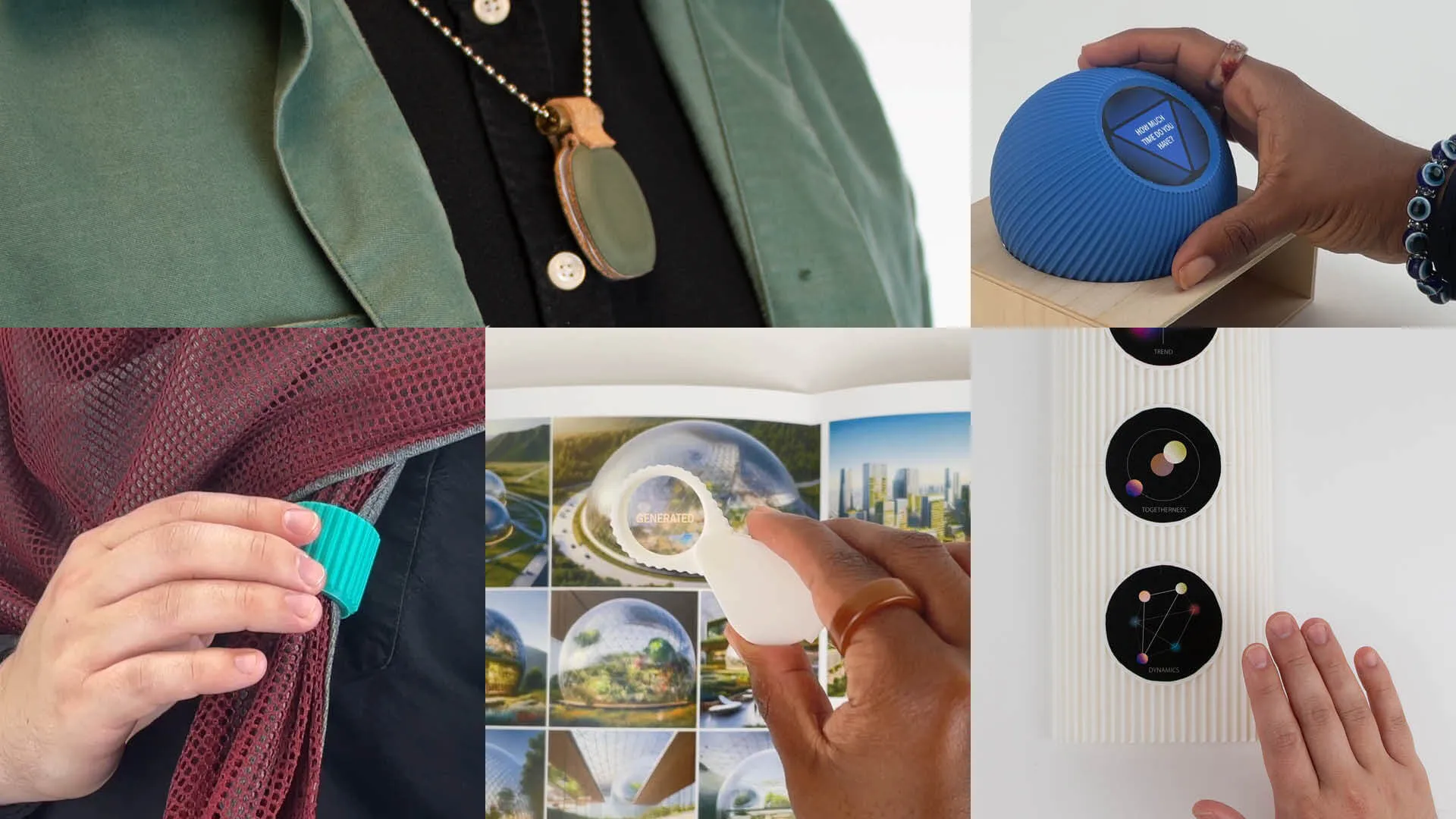

Multimodal interactions

We are already seeing significant advancements toward multimodal AI, with OpenAI’s GPT-4o and Google’s Gemini, but most AI technologies still rely on voice and text interactions, which limit the richness and convenience of inputs. We believe the ideal future AI technologies will offer a variety of less disruptive ways to interact with and seamlessly integrate into humans’ daily lives, through visual, tactile, and even emotional inputs that create an intuitive synergy between humans and AI.

Near vision: For work, build on existing AI assistant services for call summary and call interpretation by providing live call outputs that include a mix of audio, visual, and text summaries.

Far vision: Wearable and ambient tech powered by AI assistants that are made aware of the user and their surroundings through sensory and multimodal input, allowing for short and seamless prompts that enrich their life on-the-go. Imagine being at a networking event and getting a reminder of a name or face you might have forgotten.

Inspiration: The AX Visio AI-powered binoculars, which blend analog viewing with AI for bird and wildlife identification; Fireflies, which joins your meetings to automatically take notes, capture, and transcribe voice conversations across various platforms; rabbit r1 and the Humane Ai pin, which aim for a more intuitive and app-free online experience; and MIT-developed technology that translates subvocalization signals to words.

Nurturing and wellbeing

Content generation and execution of simple tasks are just the beginning of what AI can help us with. Future AI solutions will need to be able to keep track of the never-ending list of to-dos involved in managing our current busy lifestyles—so we can have time to care for ourselves and each other.

Near vision: A sleep device company creates wake-up and sleep routines customized to personal calendars, health needs, and family routines. Picture a smart speaker and light that calibrate to your bodily needs, supporting your sleep and wake-up routines, to help you get a great night’s rest before and after any day.

Far vision: An AI assistant integrated in your household that supports smart devices and communication channels for the entire family. It can order groceries and craft recipes based on everyone’s preferences, schedule maintenance, book catering for birthdays—anything a busy household needs. When you need some off-AI time, all you have to do is unplug it.

Inspiration: Aura, a specialized machine-learning platform that can be paired with ordinary microphones and understand more than human voices—like dogs barking, babies crying, and doorbells; the “mui Board Gen 2,” a Kickstarter project for a smart home hub; and Assistant with Bard, which combines Assistant’s capabilities with generative AI to help you stay on top of what’s most important, right from your phone.

These provocations are driven by our research and signals we’re seeing in the world. For us, the next step to creating human-centered AI products is testing the value of lo-fi prototypes with real users, to find out which products or features are worth our development resources and which map to true needs. After all, we’ve seen companies in the space rush to build a product and get it out the door, before they even test it with potential users. You can make something that seems super futuristic only to find out that no one wants it, or that it can’t really do what you promised. Forty years in, we’re big believers in the power of human-centered design to help us craft audacious futures with technology that people actually want.
