Veggie Vision: Designing the Kitchen of the Future

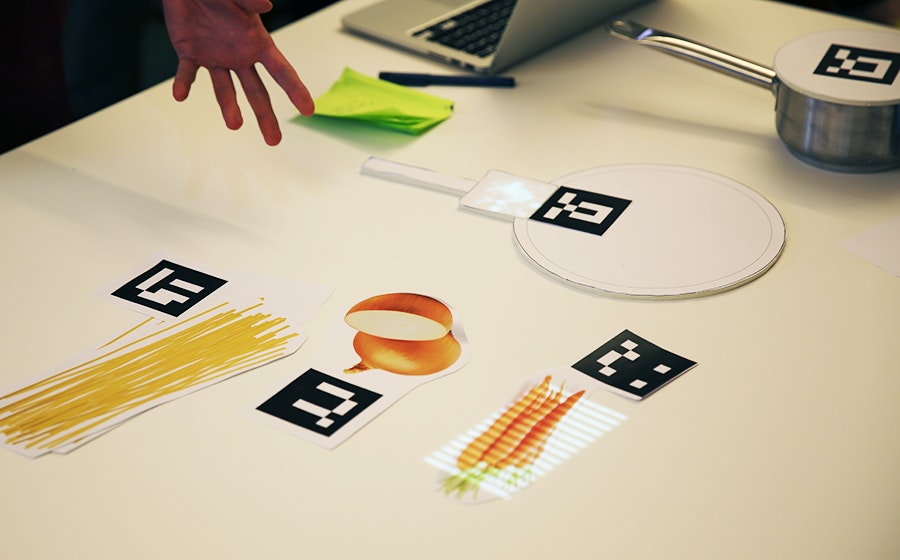

What will kitchens be like a decade from now? IDEO recently helped IKEA envision some wild ideas that will likely be entirely plausible—someday. Usually, predictions like this exist only on screens, as concept videos and digital renderings. But this time, IKEA asked IDEO to build a working prototype of one future concept: the Table for Living, a cooking and work surface that recognizes the foods you place on it and projects customized recipes and cooking tips right onto the tabletop.

Taking this 2025 appliance from concept video to functional prototype meant creating a realistic experience of technologies that won’t exist for many years. Designers had to come up with a way for the system to see and recognize foods on the table’s surface and respond accordingly. They also had to make it work reliably for more than six months while some 250,000 visitors tried it out at pop-up exhibitions at the Salone del Mobile and EXPO Milan.

To find out how they did it, I talked to IDEO London interaction designer Siri Johansson and developers David Brody and Kalyn Nakano of IDEO Palo Alto, who built custom software that borrows techniques from computer vision.

How did the need to build a prototype for real use affect the interaction design?

Siri: As soon as you put something in a public setting, people are always going to try to break it, especially if they’re really excited about it. They don’t mean to break it, of course, but they want to play with it and see what it can do. We had to focus on a few key functions and figure out how to make them work well enough that it would be feasible for people to play freely with the installation. A key design principle was to keep the technology low-key and in the background, to preserve the warmth and tactility of the kitchen, so we focused on functions that demonstrate that intention and show how the table uses digital to free up your mind so you can engage with physical things. The table knows what I’m cooking and gently guides me through the process, so I don’t have to keep going back to my iPad to browse a recipe. I can focus more on my ingredients: how they feel, how they smell.

How did it affect the software design?

Kalyn: Knowing this was going to be a live prototype forced us to consider every scenario. With on-screen prototypes, predicting how a user might interact with the screen is a lot more straightforward. But here, adding another dimension and more objects to the mix challenged us to consider all the different ways people could position and interact with the items on the table. Once we started building, it became clear that the concept video for the design was really just a starting point. Testing all the different arrangements and permutations challenged some of our initial designs and software implementation methods, especially when we started thinking about transitions. Tracking objects while they were moved around on the table forced us to implement things more formulaically to achieve a display with smoother transitions between states.

How did people’s interactions with early versions of the table change the software?

Kalyn: With a live prototype like this, you give up control over the experience. You can’t send everyone down the path you want them to take. We started building and testing our software based on the interactions shown in the concept video, but those interactions are choreographed. When people in the office started playing with the prototype, we realized that the behavior in the video (putting the veggies down on the table and sliding them from place to place) wasn’t natural for everyone. Some people would hold the vegetables in their hands or lift them off the table while deciding where to put them. These interactions weren’t wrong, but we hadn’t accounted for them in our initial designs. The need to accommodate them forced us to reconsider our image processing techniques to make sure we could still recognize the objects no matter how they were being handled.

How did you adapt the CV software to the specific needs of the project?

David: Our approach wasn’t to make CV that could be used for anything, in any situation. The better route was to take advantage of our constraints for the exhibition and build a simplified version of CV (written with openFrameworks and OpenCV) that we knew could be tractable and provable in the time we had. The table has to consistently recognize three ingredients (broccoli, tomatoes, and rice) so that people can experience how it works. While more complex CV might create models of what generic broccoli looks like, our solution uses examples of broccoli that it has already seen, along with shape and color distribution. It compares those factors with what it’s seeing in order to distinguish between objects. The distribution of color helps it distinguish between broccoli and a blue bowl of rice, while comparing with previously seen examples helps it distinguish between broccoli and a green shirt sleeve.
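
To make the idea of comparing against previously seen examples concrete, here is a minimal Python sketch. It is an illustration only, not IDEO's code: the prototype was written with openFrameworks and OpenCV, and the function names, the 64-bin color quantization, the `min_score` cutoff, and the toy pixel values below are all assumptions. It also looks at color distribution alone and leaves out shape.

```python
def color_histogram(pixels):
    """Normalized 64-bin histogram of (r, g, b) pixels, 4 levels per channel."""
    counts = [0] * 64
    for r, g, b in pixels:
        counts[(r // 64) * 16 + (g // 64) * 4 + (b // 64)] += 1
    total = max(len(pixels), 1)  # avoid dividing by zero on an empty patch
    return [c / total for c in counts]


def intersection(hist_a, hist_b):
    """Histogram intersection: 1.0 for identical distributions, 0.0 for disjoint."""
    return sum(min(a, b) for a, b in zip(hist_a, hist_b))


def classify(pixels, examples, min_score=0.5):
    """Return the label of the most similar stored example, or None if none is close."""
    hist = color_histogram(pixels)
    best_label, best_score = None, min_score
    for label, example_hist in examples:
        score = intersection(hist, example_hist)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical "already seen" examples; real ones would come from camera frames.
EXAMPLES = [
    ("broccoli", color_histogram([(40, 140, 50)] * 8 + [(70, 160, 60)] * 2)),
    ("tomato", color_histogram([(200, 30, 30)] * 9 + [(220, 60, 40)])),
    # Rice in a blue bowl: a mix of blue and near-white pixels.
    ("rice", color_histogram([(60, 90, 200)] * 6 + [(235, 235, 225)] * 4)),
]

green_patch = [(45, 150, 55)] * 10
# A sleeve that shares some green with broccoli but is mostly a darker shade.
sleeve_patch = [(40, 140, 50)] * 3 + [(20, 60, 30)] * 7

print(classify(green_patch, EXAMPLES))
print(classify(sleeve_patch, EXAMPLES))
```

In this toy setup the uniformly green patch lands closest to the broccoli example, while the sleeve patch overlaps too little with any stored distribution and is left unlabeled.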

Why was this an efficient strategy?

David: A lot of conventional CV uses a “sliding window” approach: The computer identifies an aspect of an object, like its shape, and looks for that object within a larger image by sliding the window of vision until it recognizes something that matches what it’s looking for. The hard part is making it work in real time, because the computer compares every possible “window” that contains the criteria it’s looking to match, and each comparison takes time. Since our software is only looking at colors, it’s easier to identify which windows on the table might contain the objects it’s seeking. So the first thing it does is look for the points on the table where the intensities of color are the highest, and it says, “these might be objects.” It sends that information down the pipeline to the next step, and that’s where the color-recognition algorithm comes into play: It compares the histogram of a potential object with the histograms for broccoli, tomatoes, and rice, and determines which object it’s looking at.
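
The first stage David describes, flagging the spots where color is strongest before any matching happens, might look something like the Python sketch below. This is a guess at the general shape of the idea rather than the team's implementation: it assumes "intensity of color" means HSV saturation, and the `candidate_regions` name, the thresholds, and the tiny hand-made image are all hypothetical.

```python
import colorsys
from collections import deque


def saturation(pixel):
    """HSV saturation (0.0 to 1.0) of an (r, g, b) pixel with 0-255 channels."""
    r, g, b = pixel
    return colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1]


def candidate_regions(image, min_saturation=0.5, min_pixels=2):
    """Bounding boxes (top, left, bottom, right) of connected, strongly colored blobs."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for y in range(rows):
        for x in range(cols):
            if seen[y][x] or saturation(image[y][x]) < min_saturation:
                continue
            # Breadth-first flood fill over 4-connected saturated neighbors.
            queue, blob = deque([(y, x)]), []
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                blob.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx]
                            and saturation(image[ny][nx]) >= min_saturation):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) >= min_pixels:  # ignore single-pixel noise
                ys = [p[0] for p in blob]
                xs = [p[1] for p in blob]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes


# Toy 4x6 "camera frame": T = pale tabletop, G = green item, R = red item.
T, G, R = (200, 190, 180), (40, 140, 50), (200, 30, 30)
TABLE = [
    [T, T, T, T, T, T],
    [T, G, G, T, T, T],
    [T, G, G, T, R, R],
    [T, T, T, T, R, R],
]

print(candidate_regions(TABLE))  # [(1, 1, 2, 2), (2, 4, 3, 5)]
```

Only the pixels inside each returned box would then need a histogram comparison, which is far less work than testing every possible window on the table.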

What was it that excited you about this project?

Kalyn: This was a great example of building to learn. Creating a live prototype introduced a lot of interesting interaction design questions into the software development process, and it got us to solve technical challenges that wouldn’t normally come up during a screen-based prototype. It generated a new excitement for designing dynamic and thoughtful off-screen experiences, and watching people’s reaction to it was inspiring. When we set the table up in Milan, we came in the next morning and found construction workers playing with it, saying, “bellissima!”

The IKEA Concept Kitchen 2025 was on display at IKEA Temporary, a pop-up exhibition at Salone del Mobile in Milan, Italy, April 14-19, 2015, and can be seen at EXPO Milan, May 1-October 31, 2015.

Read more about Veggie Vision and the entire Concept Kitchen on Tech Insider and at IDEO.com.

