No Beards Allowed: Exploring Bias in Facial Recognition AI

Artificial intelligence and machine learning are among the hottest topics of the tech world, but some of us at the frontier forget that many people feel intimidated by, or even afraid of, these technologies. The pop-culture trope of machine intelligence gone rogue has been a part of our cultural psyche for a good 150 years. Think 2001: A Space Odyssey’s HAL 9000, The Terminator’s Skynet, and The Matrix’s... Matrix. Combine that fear with arcane tech-speak like “recurrent neural network” and “generative adversarial network,” and in addition to feeling emotionally intimidating, AI and ML can also feel incomprehensible.

As a user experience and interaction designer with a hybrid art and tech background, I’ve long been a fan of the concept of using art and design as a Trojan horse to make tricky concepts accessible. Through intrigue, curiosity, and play, these experiences can bypass our biases and open the door to understanding, without requiring deep comprehension. If you’ve ever visited a children’s science museum and watched kids excitedly engaging with subjects that would bore them to tears in book form, then you’ve seen the power of experience and play at work.

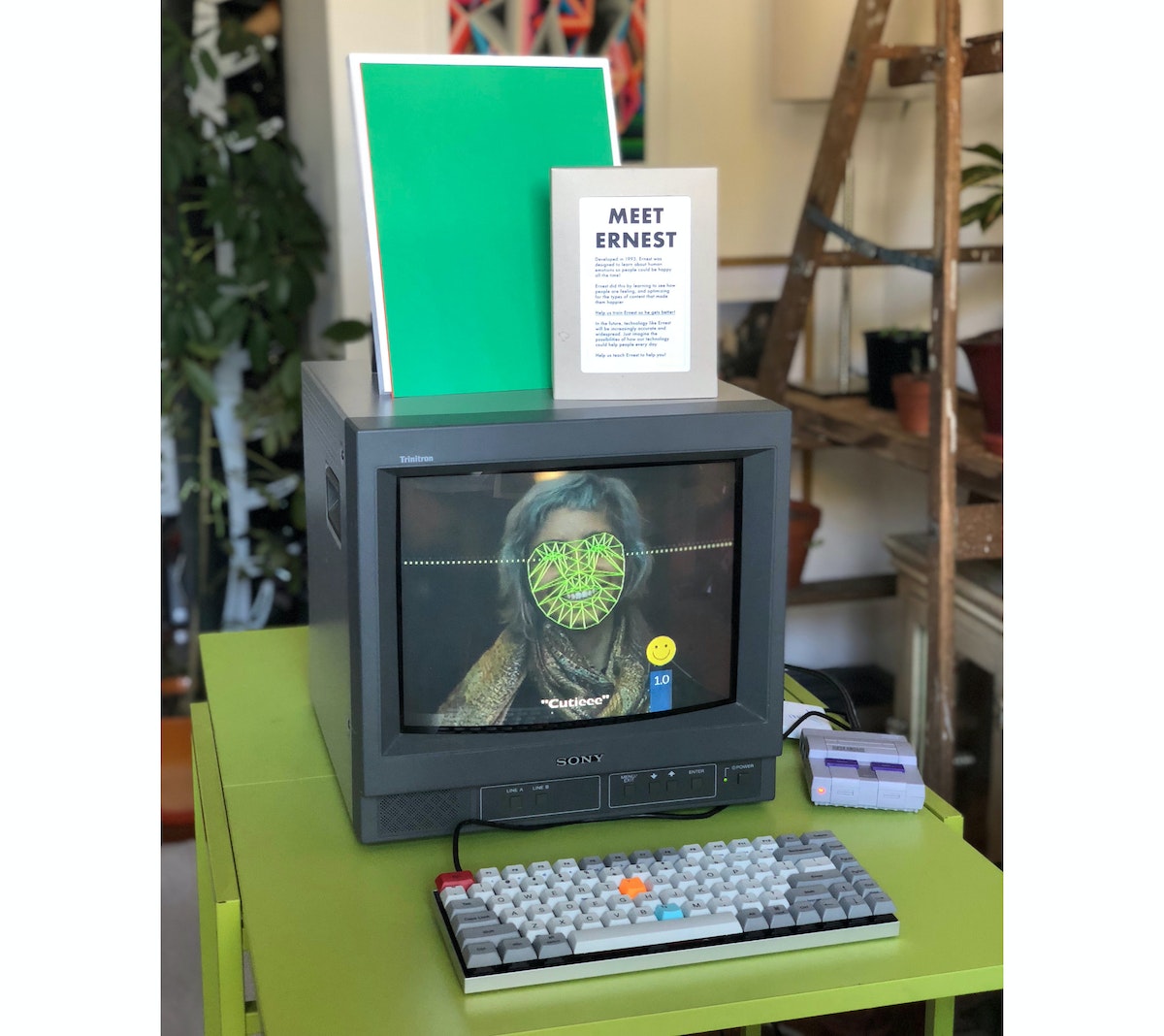

Meet ERNEST

That’s why my colleagues and I created ERNEST, an installation that gives the viewer the job of training a rudimentary artificial intelligence. Using facial and emotional recognition tech, ERNEST shows the user imagery and tries to see how happy it makes them. Our goal for this project was to engage users in a game-like experience to help them understand how machine learning models are trained, and subtly hint at the areas where bias could creep in.

Fellow IDEOer Matt Visco and I wanted to make sure ERNEST didn’t look like a modern computer—we designed it to look vintage. With its old hardware and nostalgic graphics, ERNEST doesn’t feel smart on the surface. Its look is meant to evoke a time when computers carried the promise of a utopian future, prior to mass connectivity and the ubiquitous collection of personal data.

The full installation uses five monitors, two computers, and three Raspberry Pis, which power several video loops that reveal the training data behind ERNEST, and give passersby a sense of the experience even if they don’t engage directly with it.

How it Works

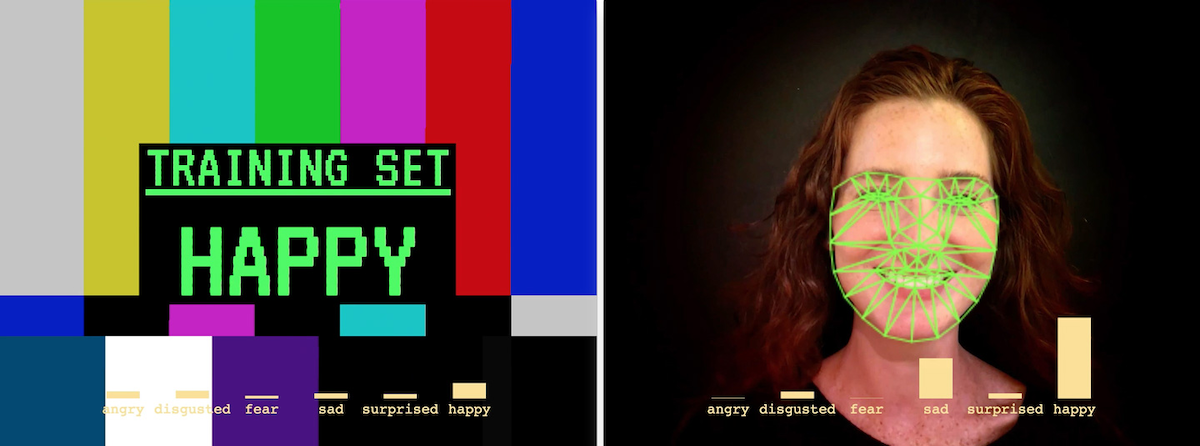

ERNEST uses computer vision to perceive the user ambiently through facial recognition, allowing it to detect when a user is looking at it and how far away they are. When a user gets close enough, ERNEST comes alive and asks the user to help it learn. It then shows the viewer images intended to make the user happy, and gauges their emotional reaction based on their facial expression using a pre-trained model. The facial tracking and emotional data is displayed to the viewer in real time, encouraging users to make faces at ERNEST. Viewers can then validate the accuracy of the model to help ERNEST know if it was correct or not.
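For readers who think in code, the loop described above can be sketched roughly as follows. This is a minimal illustration, not ERNEST's actual implementation: the names `Observation`, `run_session`, `WAKE_DISTANCE_M`, and the 0.5 happiness threshold are all hypothetical, and the `observe` and `ask_user` callbacks stand in for the computer vision model and the on-screen validation step.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical wake-up distance; the real installation's value is not stated.
WAKE_DISTANCE_M = 1.5


@dataclass
class Observation:
    """One reading from a stand-in vision model."""
    looking: bool       # is the viewer facing the screen?
    distance_m: float   # estimated distance to the viewer
    happiness: float    # 0.0 to 1.0 score from a pre-trained emotion model


@dataclass
class Round:
    image: str
    predicted_happy: bool
    confirmed: bool     # did the viewer say the guess was correct?


def should_wake(obs: Observation) -> bool:
    """Wake only when someone is looking and close enough."""
    return obs.looking and obs.distance_m <= WAKE_DISTANCE_M


def run_session(
    images: List[str],
    observe: Callable[[], Observation],
    ask_user: Callable[[str, bool], bool],
    happy_threshold: float = 0.5,
) -> List[Round]:
    """Show each image, guess the reaction, and record the viewer's verdict."""
    rounds: List[Round] = []
    if not should_wake(observe()):
        return rounds  # nobody engaged, so stay idle
    for image in images:
        obs = observe()  # reading taken while the image is on screen
        predicted = obs.happiness >= happy_threshold
        confirmed = ask_user(image, predicted)
        rounds.append(Round(image, predicted, confirmed))
    return rounds
```

The viewer's verdict in each `Round` is the interesting part: it is the human feedback that tells the system whether its guess was right.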

(Sound complicated? The video below might make it clearer.)

We installed ERNEST in IDEO San Francisco’s lobby for a couple of months, and also brought it to the San Francisco Art Institute Fort Mason Gallery for the CODAME Intersections exhibition in November 2018.

The Results

Many participants loved to challenge ERNEST, often emoting in varying ways through numerous loops of the game. Once, a group of participants spent nearly 20 minutes playing with ERNEST, taking turns to compare how it perceived each person.

When viewers interact and play with ERNEST, we’re helping them see that machines can have almost human-like perception, reacting differently to different faces, and sometimes being uncannily accurate...or completely wrong. The wrong guesses were in many cases more interesting, and were in fact a very intentional part of the design. We wanted users to see that artificial intelligence is sometimes not as intelligent as we might think.

ERNEST is meant to be a game, but what happens when real-world AI makes the wrong guesses? Or what if those wrong guesses are uneven, indicating an unintentional bias, such as working well for some faces but not others?
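One simple way to make "uneven" concrete is to compare how often the guesses are right for different groups of faces. The sketch below is only an illustration: the function names are hypothetical, the group labels are invented, and the records are made-up numbers, not data collected from ERNEST.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of correct guesses for each group label."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        correct[group] += int(was_correct)
    return {group: correct[group] / totals[group] for group in totals}


def accuracy_gap(accuracies: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served group."""
    return max(accuracies.values()) - min(accuracies.values())


# Made-up validation records: (group label, was the guess correct?)
records = [
    ("beard", True), ("beard", False), ("beard", False), ("beard", False),
    ("no_beard", True), ("no_beard", True), ("no_beard", True), ("no_beard", False),
]

accuracies = accuracy_by_group(records)
print(accuracies)
print(accuracy_gap(accuracies))
```

A large gap does not explain why a system treats groups differently, but it is a cheap first signal that it does.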

We wanted people to realize that designing systems that can ambiently perceive humans without direct input is rife with ethical concerns. And already, systems like these are being deployed at scale. The Guardian estimates that emotionally aware tech is already a $20B industry. Even something seemingly benign like ERNEST causes us to wonder: if an AI is trying to make the viewer happy, who determines what “happy” is? What if bad actors train ERNEST to think that disturbing content is “happy”? What if the technology is repurposed to provoke anxiety or anger? What happens if we deploy technology like ERNEST widely?

Of course, ERNEST only scratches the surface of these big ethical questions, but we hope that it helps give people insight into how artificial intelligence thinks, and the ways its thinking can be flawed. The more that we as a society can start to understand these systems, the better we can judge their quality, and work to design them to be in line with our shared human values.

