Creating a Customized VR Experience

As a designer, I am fascinated by exploring new ways to interact with virtual reality. Technology is able to trick our senses like never before, and we’re starting to see VR hardware like HoloLens and the HTC Vive really push things forward by allowing meaningful interaction between the real and the virtual worlds. One possibility I’ve been experimenting with is how we can create environments that can adapt to our emotional and psychological state.

My main objective is to influence a user’s mood through automatic customization of virtual worlds. Such a tool could, for example, help improve existing VR treatments for problems like phobias and anxiety disorders by automatically adjusting the intensity of the experience. If the system were able to gauge an emotional reaction, it could ease participants into facing their fears. The same capability could be used to test different environments for mindfulness exercises, or even as a design tool, to help determine which designs elicit desired reactions.

So far, most of the innovation we’ve seen in this space has come via proprietary systems like the HTC Vive controllers. But those kinds of systems are very standardized and don’t allow users to experiment much with sensors. With that in mind, I built a platform with Unity and Arduino that would enable me to explore new interactions within VR environments, and allow other makers to test their ideas, too. I’m a big believer that as hardware and other tools become more accessible, makers will dig in and uncover even more immersive VR experiences.

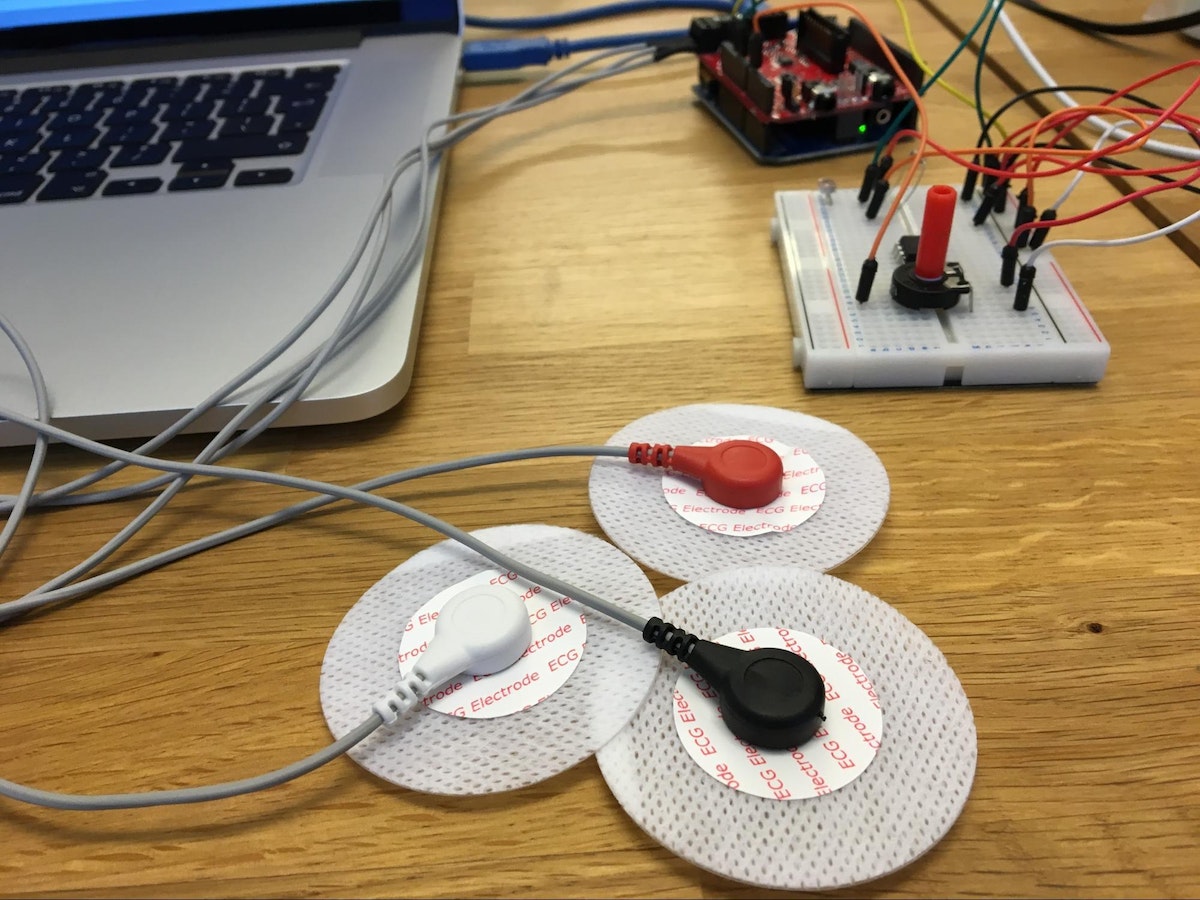

Using Unity and open source development hardware, I created an atmospheric space that can change depending on the user’s biosignals, as well as the amount of pressure they apply to sensors. To build it, I used Arduino and the eHealth platform, which can process the signal from a user’s heart rate, electrodermal activity, or breathing. In this case, I used the ECG sensor reader and wrote a small piece of code that translates the voltage signals into beats per minute, which are then sent directly to Unity over a serial connection. In Unity, I wrote a script based on Dyadica’s open code that constantly reads the input from the sensors and updates the scenario in each frame. For example, when the heartbeat goes up, the light in the virtual reality world changes to a more soothing blue; when it goes down, the illumination flashes and changes to red. The idea behind this particular interaction is to test whether changing color or lighting patterns will affect the user’s mood, either calming them down or agitating them further.
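To make that pipeline a little more concrete, here is a minimal Python sketch of the two steps described above: turning ECG voltage samples into beats per minute, then mapping that value to a light color. It is not the Arduino or Unity code from the project. The function names, the simple threshold-crossing beat detector, and the `low_bpm` and `high_bpm` bounds are all assumptions made for illustration.

```python
def beats_per_minute(samples, sample_rate_hz, threshold):
    """Estimate heart rate by counting upward threshold crossings.

    Assumption: each heartbeat produces one spike above `threshold`
    in the ECG voltage samples. Real signals usually need filtering first.
    """
    beat_indices = []
    above = False
    for i, voltage in enumerate(samples):
        if voltage >= threshold and not above:
            beat_indices.append(i)  # rising edge: count one beat
            above = True
        elif voltage < threshold:
            above = False
    if len(beat_indices) < 2:
        return None  # not enough beats to measure an interval
    span_samples = beat_indices[-1] - beat_indices[0]
    mean_interval_s = span_samples / (len(beat_indices) - 1) / sample_rate_hz
    return 60.0 / mean_interval_s


def light_color(bpm, low_bpm=60.0, high_bpm=100.0):
    """Blend the light from red (low heart rate) to blue (high heart rate).

    Returns an (r, g, b) tuple with components in the range 0.0 to 1.0.
    The bounds are hypothetical and would need tuning per user.
    """
    t = (bpm - low_bpm) / (high_bpm - low_bpm)
    t = max(0.0, min(1.0, t))  # clamp to the valid blend range
    return (1.0 - t, 0.0, t)


# Three spikes, one second apart, sampled at 100 Hz.
samples = [0.0] * 300
for peak in (50, 150, 250):
    samples[peak] = 1.0

bpm = beats_per_minute(samples, sample_rate_hz=100, threshold=0.5)
print(bpm, light_color(bpm))
```

In a setup like the one described, the first function would run on the microcontroller side and the second inside the per-frame update, with the beats-per-minute value passed between them over the serial link.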

The results from these experiments were not completely satisfactory. Our users did acknowledge that changing the colors in the virtual world influenced their state of mind, but the changes in their biorhythms were small. One of the limitations of the system is that heart rate is not an ideal indicator for mild changes in physiological activity. Electrodermal activity could provide a more accurate measure of comfort or distress, and we may turn to that in future iterations.

In another set of experiments, we used light and pressure sensors to change environments. In one virtual environment set on the coast, the light changes based on the light detected by a sensor set in the real world. Meanwhile, the size of the virtual space changes depending on the amount of pressure a participant applies to a sensor that is set in the same place on a wall in both the real and virtual worlds. The harder the user presses the wall, the smaller and more claustrophobic the virtual space will become.
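The pressure interaction can be sketched in the same spirit. The Python snippet below assumes the pressure sensor is read as a raw 10-bit analog value (0 to 1023, as on a typical Arduino analog input) and maps it linearly to a scale factor for the virtual room. The function name, the linear mapping, and the `min_scale` floor are illustrative assumptions, not the project's actual values.

```python
def room_scale(pressure_raw, max_raw=1023, min_scale=0.3):
    """Map a raw pressure reading to a room scale factor.

    No pressure keeps the room at full size (1.0); full pressure
    shrinks it to `min_scale`. Out-of-range readings are clamped.
    """
    t = max(0.0, min(1.0, pressure_raw / max_raw))
    return 1.0 - (1.0 - min_scale) * t


# The harder the press, the smaller the room.
for reading in (0, 256, 512, 1023):
    print(reading, round(room_scale(reading), 3))
```

A floor like `min_scale` keeps the space from collapsing entirely, which matters if the goal is a claustrophobic feeling rather than a room that disappears.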

We’ve also been playing around with creating uncanny experiences. I started by 3D-scanning Rich Cahill, our newest interaction designer, then placed his 3D persona inside a virtual world. We then used a Leap Motion attached to the Vive headset to increase the immersive experience by allowing users to see their own hands in the virtual world. Then, we had Rich stand in the same place as his 3D model. When Rich raised his hand to touch his (virtual) face, he felt the warmth of his (real) skin under his fingers. Every single person who agreed to do the experiment found it unsettling. It was also really fun to make, and I learned a lot about how to replicate real objects and people in the virtual environment.

Right now, these enhanced interactions are just small steps towards a richer and more immersive virtual experience. But I’m hoping this platform can be a template for other people to build their own interactive VR experiences. As more and more people dig into the technology, I’m sure we’ll see an explosion of creativity mixing the real and the virtual worlds, and maybe even valuable new hardware and platforms. Soon, instead of being passive characters in a prescribed world, we will be able to change our virtual environments to fit the state of mind that we would like to be in, and help ourselves relax, or even find inspiration. That’s when things will really start to become mind-blowing.
