The Rules of Brainstorming Change When Artificial Intelligence Gets Involved. Here’s How.

Back in early August, I opened my laptop and kicked off a remote brainstorm. Immediately, my partner started generating a huge number of ideas: a perfect blend of wild, hilarious concepts and pragmatic solutions. That's not unusual at IDEO, but what made it remarkable is that my partner wasn't just remote; it wasn't human at all.

My “partner” that day was a technology called GPT-3 from OpenAI. You may have heard of it already; if not, you can think of GPT-3 as a really good autocomplete. Like, shockingly good. Once I shared some of the output of my brainstorm with others on Slack, there were plenty of 🤯 reactions, and honestly, our minds are still blown. But clients and design teams will need to tread carefully and check their ethical compass as they imagine inspiring, human-centered applications for this new tool. As with any new technology, there are new risks to account for. Here's how I started my experimentation.

How to run a virtual brainstorm

To work with a so-called “transformer model” such as GPT-3, you feed it a pre-written prompt, and the model uses everything it saw during training (in this case, a substantial portion of the internet) to predict what should come next.
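GPT-3 itself is a very large neural network, and nothing below reflects how it works internally. As a loose illustration of the "guess what comes next" idea only, here is a toy word-level bigram autocomplete in Python: it counts which word tends to follow which in a tiny made-up corpus, then greedily extends a prompt one word at a time. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(prompt: str, follows: dict[str, Counter], max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus standing in for "a substantial portion of the internet".
corpus = "save money every day . save money every week . spend less save more"
model = train_bigrams(corpus)
print(autocomplete("we should save", model, max_words=2))  # → we should save money every
```

The real model predicts from far richer context than the previous word, which is what lets a carefully written prompt steer it toward brainstorm-style output.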

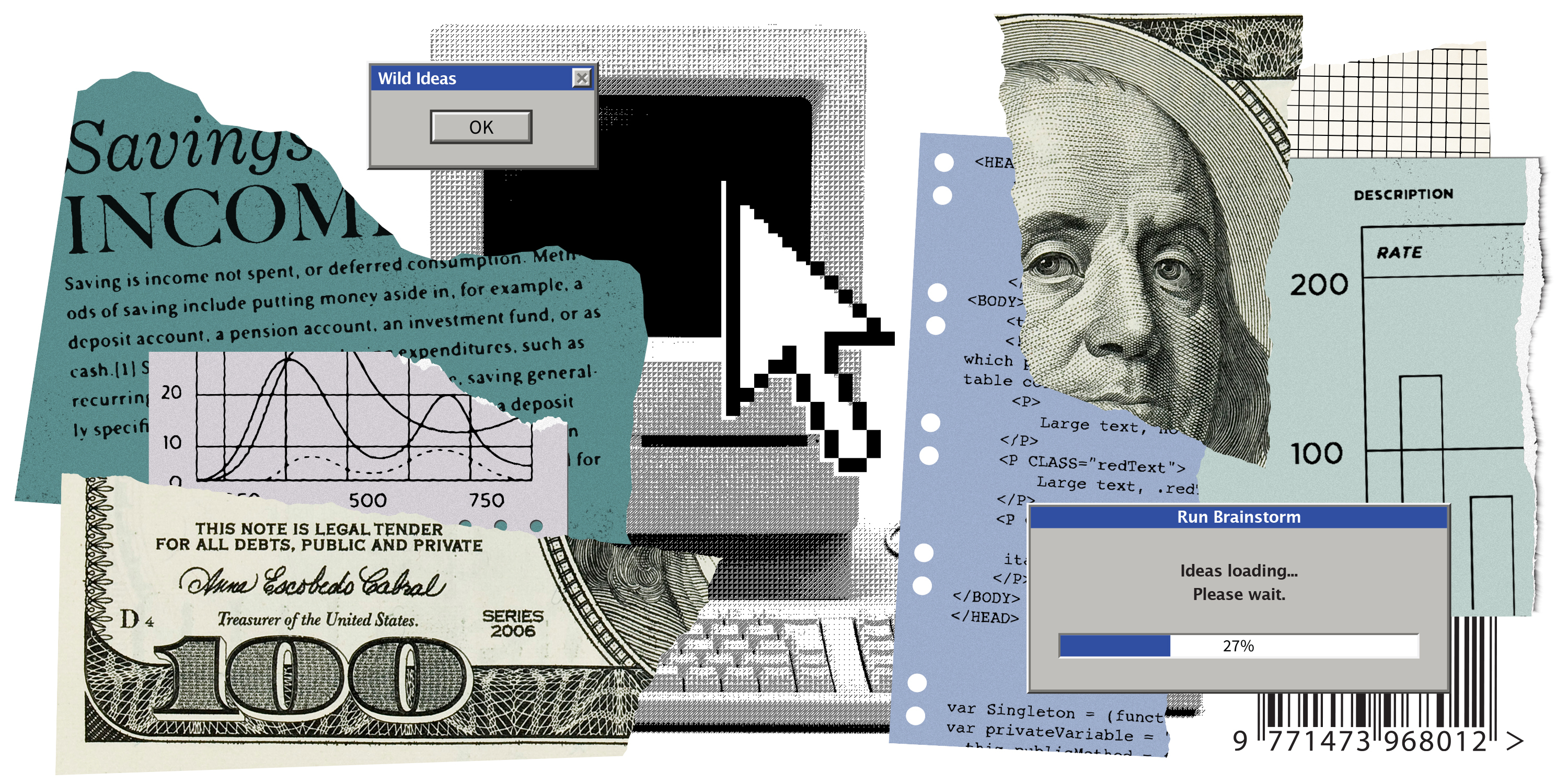

I wrote some context: This is an IDEO brainstorm, which follows our 7 Rules of Brainstorming, including “encourage wild ideas.” I gave GPT-3 a real client challenge from a past project: The team is developing a new app for a bank to encourage millennials to better utilize savings accounts. Then I wrote a brainstorm prompt, “How might we help young people turn saving money into a lifelong habit?”, and fed it five example ideas.
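The prompt described above has four parts: context, a client challenge, a "How might we" question, and seed ideas. A minimal Python sketch of assembling them into one block of text might look like the following. The section labels, the trailing open bullet, and the placeholder seed ideas are assumptions for illustration (only "Future View" is named in this article), and the call that would send the string to a completion model is deliberately omitted.

```python
def build_brainstorm_prompt(context: str, challenge: str, question: str,
                            seed_ideas: list[str]) -> str:
    """Assemble a plain-text brainstorm prompt for a text-completion model.

    The labels ("Challenge:", "Brainstorm prompt:", "Ideas:") are a
    hypothetical layout, not the exact wording of the original prompt.
    """
    lines = [
        context,
        "",
        f"Challenge: {challenge}",
        f"Brainstorm prompt: {question}",
        "",
        "Ideas:",
    ]
    # Seed ideas show the model the format and tone it should continue.
    lines += [f"- {idea}" for idea in seed_ideas]
    # End on an open bullet so the most natural continuation is a new idea.
    lines.append("-")
    return "\n".join(lines)


prompt = build_brainstorm_prompt(
    context=('This is an IDEO brainstorm, which follows our 7 Rules of '
             'Brainstorming, including "encourage wild ideas."'),
    challenge=("The team is developing a new app for a bank to encourage "
               "millennials to better utilize savings accounts."),
    question="How might we help young people turn saving money into a lifelong habit?",
    # Hypothetical placeholders; the original used five real example ideas.
    seed_ideas=["Future View: <description of the idea>", "<example idea 2>"],
)
print(prompt)
```

Ending on an empty bullet is one common way to nudge a completion model into producing another list item rather than, say, commentary about the list.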

As soon as I pressed submit, the model began churning out new ideas. Not all of them were gold, but many were perfectly good brainstorm concepts. After all, the rules also tell us to “defer judgment” and “go for quantity.” Without cherry-picking too much, here's a sample of the ideas it generated:

- Allow the user to share their saving goals with friends and have a community of people who will cheer them on or challenge them if necessary

- Reward the user with real money if they don't spend money at all in a month

- Allow the user to set up “fun budgets” (aka “triggers”) to save more money, e.g. whenever they go to Target, they'd get a pop-up asking if they'd like to put $5 towards savings

- Similar to the Future View idea, show how much more could be saved if the user decides to forgo a coffee every day or splurge on new sneakers instead of taking an extra vacation

In the last example, you can even see the model “build on the ideas of others”—another rule of brainstorming—by riffing on an idea called Future View that I had given in the prompt.

This stuff is on par or better than some of what I've seen from humans. Some [of it] is worse, too. But that anything is better is wild.

An IDEO Director on GPT-3's brainstorming output. Watch out, humans.

Another colleague suggested we tweak the prompt we gave GPT-3 to encourage it to use analogous inspiration, which primed the model to produce some truly wild ideas:

- Have “clowns” jump out of ATM's, making it less fun to withdraw money

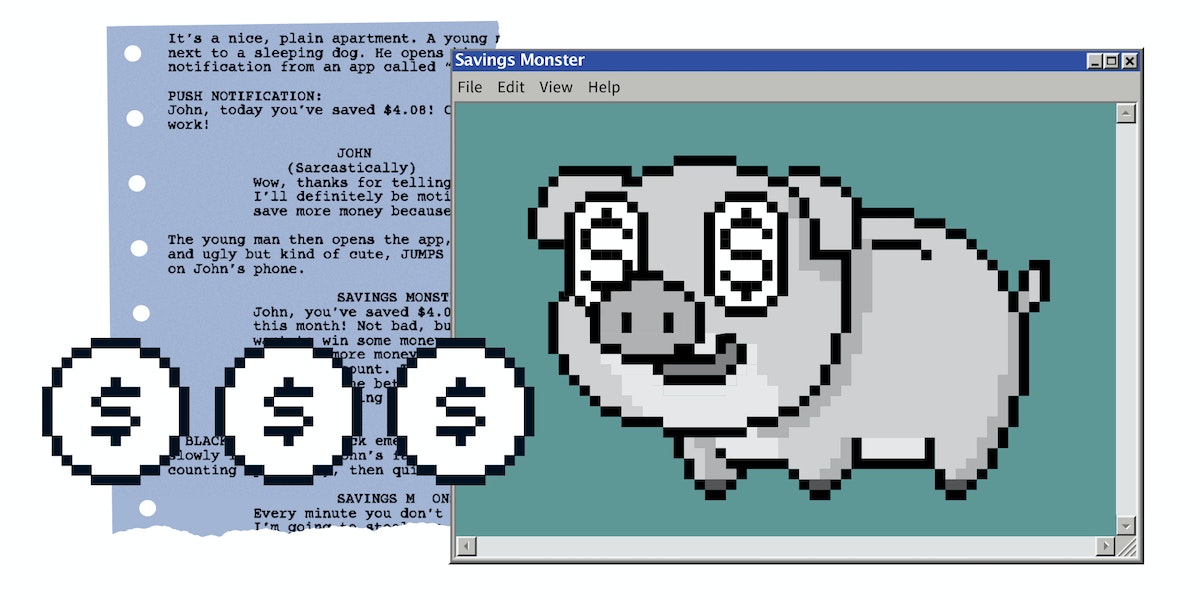

- Create a virtual reality experience where users are in an arena full of savings monsters, and need to slay them by depositing money into savings

- Create a “Savings Monster” similar to those featured on Chinese lottery shows where viewers have to guess the amount saved in order to win money

“I'd love to know what a Savings Monster is,” I mused on Slack, and a colleague responded, “Why don't you ask it?” Of course! After the brainstorm, I started a Q&A session with the model (my prompt is the question marked “Q”):

Q: Quick question: What exactly is a savings monster? You mentioned it twice.

A: I'm glad you asked! This was just an idea that emerged from the brainstorm. A savings monster is a physical manifestation of your savings goal. For example, let's say I want to save $500 by the end of next year. If I deposit $10 every day, that will get me pretty close to my goal at the end of the year, but what happens if one day I don't have $10? Now my savings monster is underfed, and he starts to throw a tantrum (eg. negative cash flow notification).

A: Yes, this is an idea inspired by Chinese lottery shows (and World War Z). We thought it might be fun for users to have a physical manifestation of their goals that they could feed (ie. save more money) when they meet their goals, and starve (ie. spend more money) when they fall short. In order for this idea to work however, the savings monster would have to be very cute, and a bit terrifying.

As it turns out, the model doesn't skip a beat when you change up the input’s format. So I decided to try my luck: In my next prompt, I asked GPT-3 to choose its favorite idea from the brainstorm and create a script for a promo video. Thankfully, my virtual brainstorm partner selected the Savings Monster concept. The resulting script was so good that I found an animator to bring it to life.

So what is this good for?

As exciting as this technology is, GPT-3 is not Artificial General Intelligence, and it was interesting to find the edges of its abilities. For example, this model can easily summarize a news article, or turn it into a fairy tale. But it's surprisingly difficult to get it to remove references to a specific person, or change the subject of a certain paragraph.

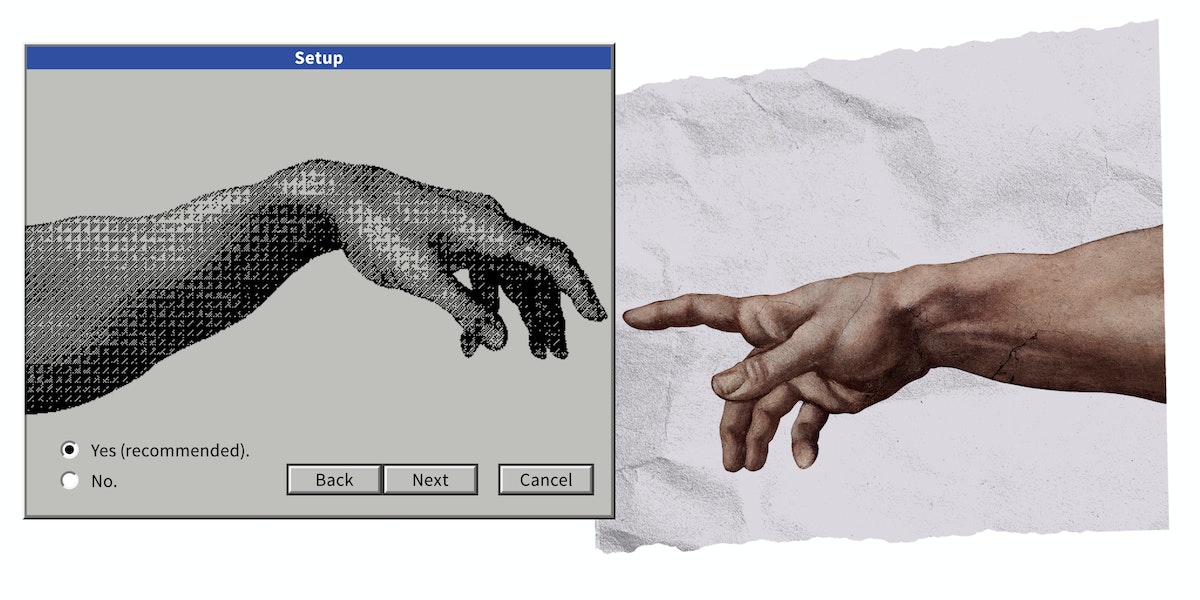

To put this in human-centered design terms, these large transformers are an extremely valuable way to augment the team during divergent moments in a project, when we're creating choices. The tech struggles, however, in convergent moments, when we're making choices. Thankfully, humans are still required to synthesize massive amounts of information about human needs into concrete human-centered design decisions.

Gotchas

There are two other issues we have to confront when considering transformer models for our prototypes and design recommendations: toxicity and bias. These models are trained on big chunks of the internet and optimized to produce output that resembles the text they were trained on. The result is that the model can reproduce the language of a scientific paper just as easily as it can mimic an internet troll. You get the good with the bad.

My experiments sparked a conversation with Jess Freaner, an IDEO Data Scientist with a special interest in ethics and AI. “Though the rules governing how it all works might not be explicitly written on the wall,” Jess told me, “being a product of our world as it exists now and in our past (the internet is an excellent, incomplete archive) we need to consider what GPT-3 reinforces and when it can reveal what needs to be subverted about society.”

Problems with toxicity and bias are not unique to GPT-3. OpenAI is working on both—they have built-in tools to manually and automatically flag output as toxic. They’re also working on the issue of bias, but it’s a thornier problem. These models literally encode a snapshot in time, and are inherently biased to reproduce the status quo. Companies and designers will need to question and problematize the output of these models with the same rigor that we are learning to bring to our human interactions.

Future possibilities

Despite the challenges, it's clear that in the past 12 months, natural language models have crossed an invisible threshold of usefulness, in the same way that speech recognition was frustratingly terrible for decades, until one day it wasn't. Early proof-of-concept prototypes demonstrate how this type of model can transform a description of an interface into functional UI code. Talk about “declarative programming.” Imagine a future in which the job of a UI designer is to write a description of the interface rather than laying out each button and label by hand. The same description might result in totally different visual expressions or interactions depending on the individual user's needs, interests, or current context.

As transformer models improve, I'm inspired by a future where people are literally in conversation with their software. Users might be able to easily adapt the same app to become a widget in their AR glasses, a quirky voice assistant, or an animated character. Because who knows: for some people, the perfect solution just might be a Savings Monster.

Illustrations by Katie Kirk

